Computer Vision Training

Helicopter detection with YOLOv8.

This post is a follow-up to my first look at object detection with YOLOv5, where I noticed how well the model detected people. The pre-trained categories in the COCO dataset cover a wide range of objects encountered in daily life, which makes them a good foundation for seeing the potential of computer vision. One of the clips I tested the model on was the "Journey to the Island" scene from Jurassic Park, which contains a series of shots of a helicopter, an object with no pre-trained category. In every frame containing the helicopter, the base COCO-trained model either misclassified it or failed to detect it. I saw this as an opportunity to train (and learn to train) a computer vision model on a single category.

YOLOv8, the successor to YOLOv5, is now available and is intended to be both more effective and more efficient in its use of resources. Given those performance improvements, I opted to use the latest model for my training project. The project had four steps: assemble a dataset, label the images, train the model, and test the model.

Assemble a Dataset

I sourced the dataset from Google Images and from frames pulled from a series of high-budget motion pictures. In total I had 158 images, which is considered a small dataset for machine learning. The images covered a variety of lighting conditions, whole and partial views of the helicopter, different camera angles, and a range of backgrounds. Variety is key to assembling an effective dataset for model training.
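The post doesn't say how the 158 images were divided between training and validation. A common approach, assumed here for illustration, is a reproducible random 80/20 split. The helper names and the folder name below are hypothetical; this is a sketch, not the split I actually used.

```python
import random
from pathlib import Path

# Assumption: images are JPEG or PNG files in a single folder.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_images(folder):
    """Collect image file names (not full paths) from a folder."""
    return [p.name for p in Path(folder).iterdir() if p.suffix.lower() in IMAGE_SUFFIXES]


def split_names(names, val_fraction=0.2, seed=0):
    """Return (train, val) lists; the same seed always yields the same split."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    shuffled = sorted(names)  # sort first so the result doesn't depend on input order
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]
```

With 158 images and the default 20% validation fraction, this gives 126 training and 32 validation images. Usage, with a hypothetical folder name: `train, val = split_names(list_images("helicopter_images"))`. Each image's label file should then be placed under a matching `labels/train` or `labels/val` directory with the same file stem, which is the layout Ultralytics expects.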

Label the Dataset

I used Label Studio, which provides an effective in-browser interface for drawing bounding boxes on the dataset's images. Because my training iterations proved difficult, I painstakingly labeled the set four separate times. On the first pass, I drew bounding boxes around the entire helicopter, including the rotor blades. Not all images showed the rotors, so the labels were inconsistent from image to image. For the second and third passes, I resized all images to a square aspect ratio, because I wondered about the distortion introduced during model training and whether it was consistent across the dataset. The fourth and final pass placed bounding boxes around the body of the aircraft only, excluding the rotors. I exported the labeled dataset in YOLO format.
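In YOLO format, each image gets a text file with one line per box: the class id, then the box center x, center y, width, and height, each expressed as a fraction of the image width or height. A minimal sketch of that conversion follows; the function names are my own and are not part of Label Studio or Ultralytics.

```python
def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    Center and size are divided by the image width/height, so the values
    are fractions in [0, 1] rather than pixel counts.
    """
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} does not fit a {img_w}x{img_h} image")
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def from_yolo_line(line, img_w, img_h):
    """Inverse of to_yolo_line: return (class_id, (x_min, y_min, x_max, y_max)) in pixels."""
    cls, xc, yc, w, h = line.split()
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    return int(cls), (xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2)


if __name__ == "__main__":
    # Hypothetical fuselage-only box in a 1920x1080 film frame.
    print(to_yolo_line(0, (400, 300, 1200, 620), 1920, 1080))
    # prints "0 0.416667 0.425926 0.416667 0.296296"
```

Because the coordinates are normalized, stretching an image to a square scales the box and the image dimensions by the same factors, so the label line itself does not change. What stretching does change is the helicopter's apparent shape, which is the distortion that can matter for training.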

Train the Model

I explored using AWS Spot Instances for model training, hoping to run YOLOv8x, the largest model in the YOLOv8 family, to obtain the best possible results. However, no Spot Instances were available that met my requirements for region, GPU, AMI, and NVIDIA driver and PyTorch support. I also evaluated an On-Demand instance, but it fell outside what my first-year AWS Free Tier account covered. I opened two support tickets with AWS and was impressed by their response times and their attention to the details of my small, if not insignificant, use case.

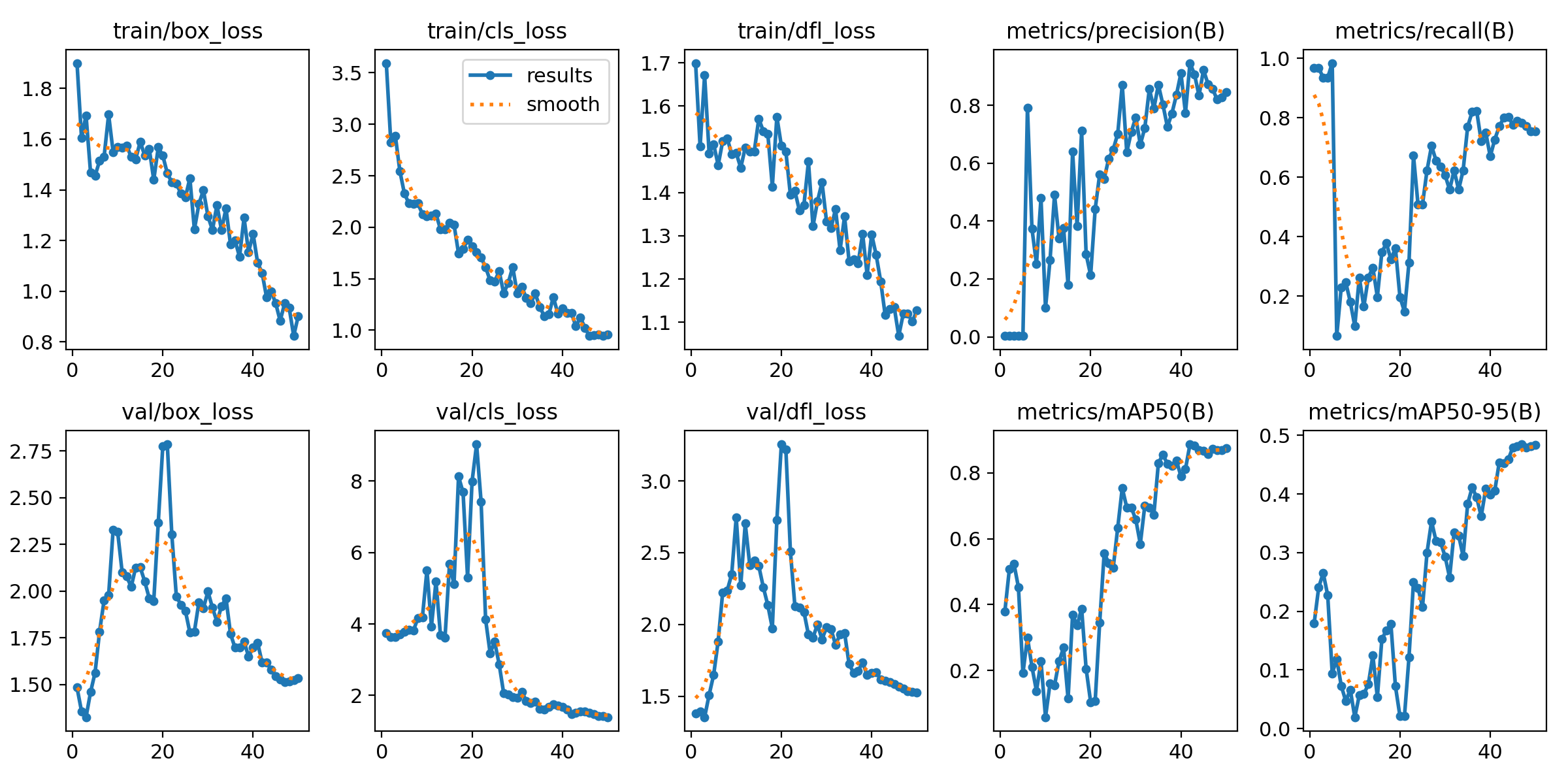

Ultimately, I trained on my MacBook Air as a CPU-only process, using YOLOv8n, the nano model. It produced usable results; otherwise I would not be reporting my progress. All three training losses, box_loss, cls_loss, and dfl_loss (box regression, classification, and distribution focal loss), decreased significantly, and detection precision increased steadily over 50 epochs, which took approximately 45 minutes to complete.
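For reference, a CPU-only run like this one can be sketched with the Ultralytics Python API. The folder layout, the `helicopter.yaml` file name, starting from the COCO-pretrained `yolov8n.pt` checkpoint, and `imgsz=640` are assumptions for illustration, not settings recorded in this post.

```python
from pathlib import Path

# Hypothetical dataset location with images/{train,val} and labels/{train,val}.
DATASET_ROOT = Path("datasets/helicopter")


def write_data_yaml(root, names, out_file):
    """Write the dataset description that Ultralytics reads via `data=`."""
    lines = [
        f"path: {Path(root).resolve()}",
        "train: images/train",
        "val: images/val",
        "names:",
    ]
    lines += [f"  {i}: {name}" for i, name in enumerate(names)]
    Path(out_file).write_text("\n".join(lines) + "\n")
    return Path(out_file)


def train_cpu(data_yaml, epochs=50):
    """Fine-tune the nano checkpoint on the CPU; requires `pip install ultralytics`."""
    from ultralytics import YOLO  # imported lazily so the YAML helper works without it

    model = YOLO("yolov8n.pt")  # downloads the pretrained weights on first use
    model.train(data=str(data_yaml), epochs=epochs, imgsz=640, device="cpu")
    return model
```

Usage, with the hypothetical paths above: `train_cpu(write_data_yaml(DATASET_ROOT, ["helicopter"], DATASET_ROOT / "helicopter.yaml"))`. Ultralytics saves each run under a `runs/detect/train*` directory, with the best checkpoint at `weights/best.pt`.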

The results do not reach the quantitative performance I would expect from each of the individual COCO categories in the publicly released models. However, for single-category training and detection, and given the limits of training the nano model on a CPU only, I was pleased with the moderate success of the detection during testing.
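To put numbers behind a comparison like this, one option is to summarize the `results.csv` file that Ultralytics writes into each training run directory. The column names below match the files I have seen from YOLOv8 runs, but they are an assumption; check the header of your own file. Some versions pad the names with spaces, which the sketch strips.

```python
import csv
import io

# Assumed column names; verify against your own results.csv header.
COLUMNS = (
    "train/box_loss",
    "train/cls_loss",
    "train/dfl_loss",
    "metrics/precision(B)",
    "metrics/mAP50(B)",
)


def summarize(csv_text, columns=COLUMNS):
    """Return {column: (first_epoch_value, last_epoch_value)} for columns present."""
    reader = csv.reader(io.StringIO(csv_text))
    header = [h.strip() for h in next(reader)]  # strip padding around names
    rows = [[float(v) for v in row] for row in reader if row]
    if not rows:
        return {}
    summary = {}
    for name in columns:
        if name in header:
            idx = header.index(name)
            summary[name] = (rows[0][idx], rows[-1][idx])
    return summary
```

Usage: `summarize(Path("runs/detect/train/results.csv").read_text())`, adjusting the run directory name to match your run. Comparing first and last values per column turns "decreased significantly" into a concrete before-and-after figure.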

Test the Model

I ran the newly trained best.pt weights on a series of scenes from blockbuster films, including both old and new 007 films, Jurassic Park, and The Matrix, among others. The results were satisfactory, at least after a week and a half of troubleshooting the labeling process, AWS Spot Instance versus On-Demand configurations, and local CPU-only training.
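These tests were judged by eye. A rough way to attach a number to a clip in which a helicopter is on screen throughout is the fraction of frames with at least one confident detection. The helper names, the 0.5 threshold, and the example file names are hypothetical; this is a sketch, not how the clips above were evaluated.

```python
def detection_rate(per_frame_confidences, threshold=0.5):
    """Fraction of frames with at least one detection at or above `threshold`."""
    frames = list(per_frame_confidences)
    if not frames:
        return 0.0
    hits = sum(1 for confs in frames if any(c >= threshold for c in confs))
    return hits / len(frames)


def video_confidences(weights, video_path, conf=0.25):
    """Yield each frame's detection confidences as a list (requires ultralytics)."""
    from ultralytics import YOLO  # imported lazily; detection_rate needs only the stdlib

    model = YOLO(weights)
    # stream=True returns a generator, so long clips aren't held in memory at once.
    for result in model.predict(source=video_path, conf=conf, stream=True):
        yield result.boxes.conf.tolist()
```

Usage: `detection_rate(video_confidences("runs/detect/train/weights/best.pt", "clip.mp4"))`. This is not precision or recall, since there is no ground truth; it only makes sense for clips where the helicopter is visible in every frame, and on clips without helicopters it instead approximates a false-positive rate.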

Next Steps

This model only detects helicopters, so it quickly falls into the "man with a hammer" trap (to a man with a hammer, everything looks like a nail): with only one label to give, it is prone to seeing helicopters where there are none. The real test is to integrate single-category training into a larger, multi-category dataset. Before jumping straight into the full COCO dataset, which would amount to 81 categories with the addition of my helicopter class, I plan to test just two categories: person and helicopter. This will be a more complex but more realistic application of computer vision, and the next step in my foray into object detection with machine learning.
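One common way to build a two-class person + helicopter dataset, assumed here since the plan above doesn't specify one, is to keep COCO's person class as id 0 and move the helicopter labels from id 0 to id 1. The helper below is hypothetical; it rewrites the class id on each YOLO label line and drops classes not listed in the mapping.

```python
def remap_label_text(text, mapping):
    """Rewrite the leading class id of each YOLO label line using `mapping`.

    Lines whose class is not in `mapping` are dropped, which also filters a
    multi-class COCO label file down to just the classes being kept.
    """
    out = []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        cls = int(parts[0])
        if cls in mapping:
            out.append(" ".join([str(mapping[cls])] + parts[1:]))
    return "\n".join(out) + ("\n" if out else "")


# Hypothetical usage:
#   helicopter label files: remap_label_text(text, {0: 1})
#   COCO label files, keeping person only: remap_label_text(text, {0: 0})
```

One caveat with this design: any people who appear unlabeled in the helicopter images, or helicopters in the COCO images, become background examples, so those images may need a second labeling pass for the other class.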